The main result of software testing activities is the discovery of bugs, also called defects or incidents. Besides fixing them, what else can you do with the information they provide? In this extract from her book “Guide to Advanced Software Testing”, Anne Mette Hass discusses how you can define and use metrics from your bug tracking activities to better understand your software testing efforts and your software development process.

There is no reason to collect information about incidents or defects if it is not going to be used for anything. On the other hand, information that can be extracted from incident reports is essential for a number of people in the organization, including test management, project management, project participants, process improvement people, and organizational management.

If you are involved in defining incident reports, ask these people what they need to know, and inspire them if they do not yet know what to ask for. The primary areas in which incident report information can be used are:

* Estimation and progress;

* Incident distribution;

* Effectiveness of quality assurance activities;

* Ideas for process improvement.

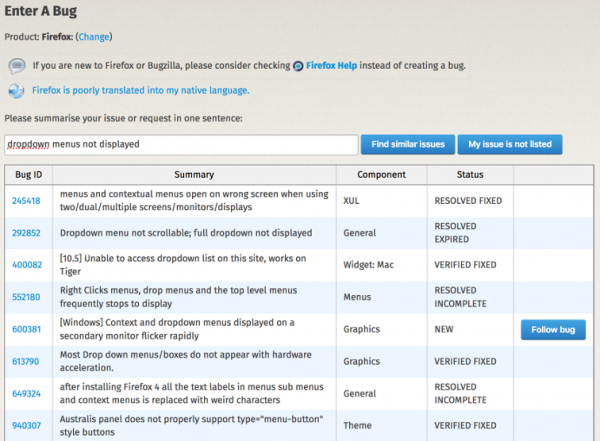

Bugzilla screen. Source: https://wiki.mozilla.org/BMO/How_to_Use_Bugzilla

Direct measures can be interesting in themselves, but they become far more informative when we use them to calculate more complex, derived measures.

We don’t learn much if we are told that testing has found 543 faults. But if we know that we had estimated finding approximately 200, we have gained some food for thought.

Some of the direct measures we can extract from incident reports at any given time are:

* Total number of incidents;

* The number of open incidents;

* The number of closed incidents;

* The time it took to close each incident report;

* Which changes have been made since the last release.
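As a minimal sketch of how the first four measures could be computed, the Python snippet below assumes a hypothetical `Incident` record holding only an ID, an opening date and an optional closing date. Real incident trackers store far more, and the field names here are illustrative, not any tool's schema; the dates are made-up sample data.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Incident:
    """Hypothetical minimal incident record; real trackers store many more fields."""
    incident_id: int
    opened: datetime
    closed: Optional[datetime] = None  # None while the incident is still open


def direct_measures(incidents: list[Incident]) -> dict:
    """Count total, open and closed incidents and collect time-to-close per closed incident."""
    closed = [i for i in incidents if i.closed is not None]
    return {
        "total": len(incidents),
        "open": len(incidents) - len(closed),
        "closed": len(closed),
        # Time to close is only defined for incidents that have actually been closed.
        "time_to_close": {i.incident_id: i.closed - i.opened for i in closed},
    }


# Made-up sample data for illustration only.
incidents = [
    Incident(1, datetime(2024, 3, 1), datetime(2024, 3, 4)),
    Incident(2, datetime(2024, 3, 2)),
    Incident(3, datetime(2024, 3, 2), datetime(2024, 3, 3)),
]
m = direct_measures(incidents)
print(m["total"], m["open"], m["closed"])  # 3 1 2
print(m["time_to_close"][1])               # 3 days, 0:00:00
```

Keeping the closing date optional, rather than storing a separate status flag, means the open and closed counts can never disagree with the dates.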

The incidents can also be counted per classification, and this is where life gets much easier if a defined classification scheme has been used.

Just to mention a few of the possibilities, we may want to count the number of:

* Incidents found during review of the requirements;

* Incidents found during component testing;

* Incidents where the source was a specification;

* Incidents where the type was a data problem.
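With classification fields in place, each of these counts is a simple grouping operation. The sketch below assumes a hypothetical `ClassifiedIncident` record with three fields (`phase_found`, `source`, `incident_type`) whose values are illustrative rather than taken from any standard scheme, and uses `collections.Counter` to tally one field at a time.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ClassifiedIncident:
    """Hypothetical record; field names and values are illustrative, not a standard scheme."""
    phase_found: str    # e.g. "requirements review", "component test"
    source: str         # e.g. "specification", "code"
    incident_type: str  # e.g. "data", "logic"


def count_by(incidents, field):
    """Count incidents per value of one classification field."""
    return Counter(getattr(i, field) for i in incidents)


# Made-up sample data for illustration only.
incidents = [
    ClassifiedIncident("requirements review", "specification", "logic"),
    ClassifiedIncident("component test", "code", "data"),
    ClassifiedIncident("component test", "specification", "data"),
]
print(count_by(incidents, "phase_found")["component test"])  # 2
print(count_by(incidents, "source")["specification"])        # 2
print(count_by(incidents, "incident_type")["data"])          # 2
```

The counts are only as trustworthy as the classification itself: if testers assign values inconsistently, the tallies will be too.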

We can also get associated time information from the incident reports and use this in connection with some of the above measures.

For estimation and progress purposes, we can compare the actual time it took to close an incident with our estimate, and so become better at estimating next time. We can also follow the trend in open and closed incidents over time and use it to estimate when testing can be stopped.
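Both uses can be sketched in Python, assuming hypothetical inputs: per-incident estimated and actual hours to close, and parallel lists of opening and closing dates. The ratio shows how far off the estimates were overall, and the per-day count of open incidents gives the trend that can feed into the decision on when to stop testing. It is one input to that decision, not a stopping rule.

```python
from datetime import date, timedelta


def estimation_ratio(estimated_hours, actual_hours):
    """Total actual effort divided by total estimated effort (above 1.0 means underestimated)."""
    return sum(actual_hours) / sum(estimated_hours)


def open_per_day(opened, closed, start, end):
    """Number of incidents still open at the end of each day from start to end, inclusive.

    opened and closed are parallel lists of dates; closed[i] is None if incident i is still open.
    """
    counts = {}
    day = start
    while day <= end:
        counts[day] = sum(
            1
            for o, c in zip(opened, closed)
            if o <= day and (c is None or c > day)
        )
        day += timedelta(days=1)
    return counts


# Made-up sample data for illustration only.
print(estimation_ratio([4, 2, 6], [6, 3, 6]))  # 1.25
opened = [date(2024, 3, 1), date(2024, 3, 1), date(2024, 3, 2)]
closed = [date(2024, 3, 2), None, date(2024, 3, 3)]
trend = open_per_day(opened, closed, date(2024, 3, 1), date(2024, 3, 3))
print(list(trend.values()))  # [2, 2, 1]
```

An incident closed on a given day is counted as no longer open at the end of that day, which is why the first incident drops out of the count on 2 March.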

For incident distribution we can determine how incidents are distributed over the components and areas in the design. This helps us identify the more fault-prone and, hence, high-risk areas. We can also determine incident distribution in relation to work product characteristics, such as size, complexity, or technology; or we can determine distribution in relation to development activities, severity, or type.
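Raw counts per component can mislead, because larger components tend to have more incidents simply by being larger. A minimal sketch of distribution relative to size follows, assuming a hypothetical size measure in thousands of lines of code (KLOC) per component; the component names and numbers are invented for illustration.

```python
from collections import Counter


def defect_density(incident_components, component_kloc):
    """Incidents per thousand lines of code for each component.

    incident_components: one component name per incident.
    component_kloc: size of each component in KLOC (hypothetical size measure).
    """
    counts = Counter(incident_components)
    return {
        name: counts.get(name, 0) / kloc
        for name, kloc in component_kloc.items()
    }


# Made-up sample data for illustration only.
found_in = ["billing", "billing", "billing", "ui", "reports", "billing"]
sizes = {"billing": 2.0, "ui": 4.0, "reports": 1.0}
density = defect_density(found_in, sizes)
ranked = sorted(density, key=density.get, reverse=True)
print(density)  # {'billing': 2.0, 'ui': 0.25, 'reports': 1.0}
print(ranked)   # ['billing', 'reports', 'ui']
```

The same grouping works for the other characteristics the text mentions (complexity, technology, severity, type) by swapping the key and, where it makes sense, the normalizing measure.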

For information about the effectiveness of quality assurance activities, we can calculate the defect detection percentage (DDP) of various quality assurance activities as time goes by.

The DDP is the percentage of the defects in an object that a specific quality assurance activity found, out of all the defects known so far that the activity could have found. The DDP can only stay the same or fall over time, as defects the activity missed are detected later. It is therefore usually given for a specific activity with an associated time frame, for example the DDP for system test after 3 months’ use.

For example, suppose the component test finds 75 defects. At the end of the component test activity, its DDP is 100% (we have found all the defects we know of). The system test then finds another 25 defects that could have been found in the component test. The DDP of the component test after system test is therefore only 75% (we found 75 of the 100 defects we now know we could have found).

The DDP may fall even further if the customer reports more failures caused by defects that the component test could have found.
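The calculation in the example can be written as a small function: the defects found by an activity divided by those defects plus every defect found later that the activity could have found, times 100. The function name `ddp` and its parameters are hypothetical; the 5 customer-reported defects in the last call are invented to show the further fall described above.

```python
def ddp(found_by_activity, escaped_later):
    """Defect detection percentage of one QA activity.

    found_by_activity: defects the activity itself found.
    escaped_later: counts of defects found afterwards (later test levels, customers)
        that this activity could have found.
    """
    total_known = found_by_activity + sum(escaped_later)
    if total_known == 0:
        raise ValueError("no defects known; DDP is undefined")
    return 100.0 * found_by_activity / total_known


print(ddp(75, []))                   # 100.0 (end of component test)
print(ddp(75, [25]))                 # 75.0 (after system test)
print(round(ddp(75, [25, 5]), 1))    # 71.4 (after 5 hypothetical customer reports)
```

Passing the later findings as a list, one entry per later activity, keeps the time frame visible: each new entry corresponds to a later point at which the DDP is recalculated.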

The information extracted from incident reports may be used to analyze the entire course of the development and identify ideas for process improvement. Process improvement is, at least at the higher levels, concerned with defect prevention. We can analyze the information to detect trends and tendencies in the incidents, and identify ways to improve the processes so that we avoid making the same errors again and again and detect a higher proportion of those we do make.

About the Author

This article is taken from Anne Mette Hass’s book “Guide to Advanced Software Testing” and is reproduced here with permission from Artech House. Readers can buy this book with a 40% discount at http://us.artechhouse.com/Guide-to-Advanced-Software-Testing-Second-Edition-P1684.aspx using the code “MethodsAndTools2017” until the end of 2017. Anne Mette Hass is a Compliance Consultant at NNIT. She earned her M.Sc. in civil engineering from the Technical University of Denmark and an M.B.A. in organizational behavior from Heriot-Watt University, Edinburgh, as well as the ISEB Testing Practitioner Certificate.