Quotes on Software Testing: Load Testing, Unit Testing, Functional Testing

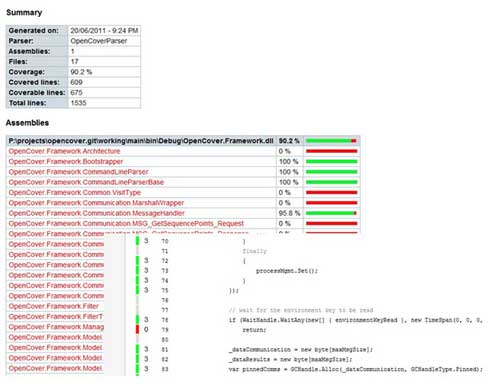

Code coverage is a metric that measures the degree to which the source code of a program is exercised by a particular test suite. It is reported by open source and commercial code coverage tools and displayed in quality dashboards such as SonarQube. The right level of code coverage is a frequent topic of debate. In his book Quality Code, Stephen Vance explains the limits of this metric.
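The following is a rough illustration of what the metric counts. It is not Stephen Vance's material and not how any particular tool (SonarQube or otherwise) works. This minimal Python sketch computes line coverage, the simplest variant of the metric: the fraction of a function's executable lines that run at least once during a set of calls. It uses only the standard library's `sys.settrace` and `dis.findlinestarts`. The names `line_coverage`, `executable_lines` and `classify` are hypothetical and chosen for illustration.

```python
import dis
import sys
from types import CodeType
from typing import Callable, Iterable


def executable_lines(code: CodeType) -> set[int]:
    """Hypothetical helper: line numbers that carry bytecode, minus the `def` line."""
    return {
        line
        for _, line in dis.findlinestarts(code)
        if line is not None and line != code.co_firstlineno
    }


def line_coverage(func: Callable, calls: Iterable[tuple]) -> float:
    """Hypothetical helper: call `func` once per argument tuple and return the
    fraction of its executable lines that ran at least once."""
    target = func.__code__
    executed: set[int] = set()

    def local_trace(frame, event, arg):
        # 'line' events fire each time a new source line starts executing.
        if event == "line":
            executed.add(frame.f_lineno)
        return local_trace

    def global_trace(frame, event, arg):
        # Called for each new frame; trace only frames running the target function.
        return local_trace if frame.f_code is target else None

    previous = sys.gettrace()
    sys.settrace(global_trace)
    try:
        for args in calls:
            func(*args)
    finally:
        sys.settrace(previous)  # restore any tracer that was already installed

    lines = executable_lines(target)
    return len(executed & lines) / len(lines) if lines else 1.0


# Toy function under test (hypothetical): two branches, three executable lines.
def classify(n: int) -> str:
    if n < 0:
        return "negative"
    return "non-negative"


if __name__ == "__main__":
    print(f"{line_coverage(classify, [(5,)]):.0%}")         # 67%: the negative branch never ran
    print(f"{line_coverage(classify, [(5,), (-1,)]):.0%}")  # 100%
```

The sketch only records which lines ran. It says nothing about whether any assertion checked the result, so a suite can reach 100% line coverage without verifying anything.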

Without a full user-centered process, performing a usability test at the end of the development process merely serves to highlight the unacceptable nature of the design. It’s a sad and frustrating result, but there is usually little that can be done except to release the poor design.

When discussing TDD with my friends and coworkers, often heatedly, an interesting pattern has appeared. All of the arguments about expensive refactoring and the need for up-front design are never really challenged. Instead, what has always been the final refuge for those arguing for TDD is that it makes development fun again.

A Reflection on Up-Front Testing

As we were saying, up-front testing really isn’t testing at all. It is really up-front design through the analysis of our tests. Can we take this testing even further? When XP came out and suggested doing unit tests, many of us realized that if we combined a series of unit tests together, we could get the equivalent of automated acceptance testing.

What main factors contribute to success in test automation? What common factors most often lead to the failure of an automation effort? There are no simple universal answers to these questions, but some common elements exist. We believe that two of the most important elements are management issues and the testware architecture:

Testing is often seen as a surrogate for quality and if you ask a developer what he is doing about quality, “testing” is often the answer. But testing is not about quality. Quality has to be built in, not bolted on, and as such, quality is a developer task. Period. This brings us to fatal flaw number 1: Testers have become a crutch for developers. The less we make them think about testing and the easier we make testing, the less testing they will do.[…] When testing becomes a service that enables developers to not think about it, then they will not think about it. Testing should involve some pain. It should involve some concern on the part of developers. To the extent that we have made testing too easy, we have made developers too lazy. The fact that testing is a separate organization at Google exacerbates this problem. Quality is not only someone else’s problem; it is another organization’s problem. Like my lawn service, the responsible party is easy to identify and easy to blame when something goes wrong.

The second fatal flaw is also related to developers and testers separated by organizational boundaries. Testers identify with their role and not their product. Whenever the focus is not on the product, the product suffers. After all, the ultimate purpose of software development is to build a product, not to code a product, not to test a product, not to document a product. Every role an engineer performs is in […]

“As we were saying, up-front testing really isn’t testing at all. It is really up-front design through the analysis of our tests. Can we take this testing even further? When XP came out and suggested doing unit tests, many of us realized that if we combined a series of unit tests together, we could get the equivalent of automated acceptance testing.”